It is well established that many psychiatric disorders first emerge during the formative periods of childhood and adolescence (Kessler et al., 2005; Paus, Keshavan, & Giedd, 2008), when the brain is continuously subject to growth and experience-related changes. This applies not only to classic neurodevelopmental disorders such as attention-deficit/hyperactivity disorder (ADHD) but also to psychiatric disorders such as depression or obsessive-compulsive disorder (OCD), which are often attributed to adulthood (Hauser, Will, Dubois, & Dolan, 2019). According to evolutionary models, individuals vary considerably in their development during these sensitive periods (Frankenhuis & Fraley, 2017). It is, however, far less clear how exactly brain development makes some individuals more vulnerable to psychiatric disorders (Hauser et al., 2019). One approach is to study developmental trajectories in people with psychiatric disorders and relate them to fundamental cognitive processes using computational modelling, in order to explain how, and possibly why, certain thought processes deviate in patients (Hauser et al., 2019). The goal of so-called theory-driven computational approaches is to capture and characterize psychiatric and neurodevelopmental disorders quantitatively, and thereby to attain a better understanding of the mechanisms at play.

Reinforcement Learning

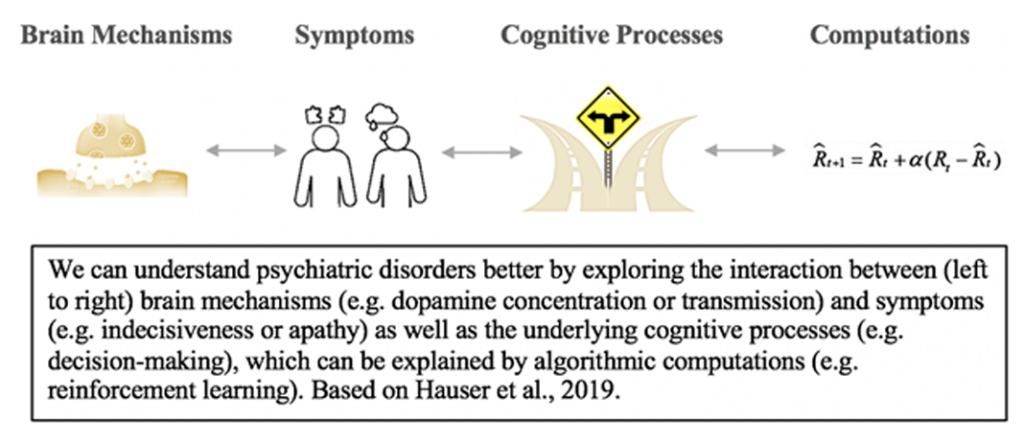

A powerful tool in computational psychiatry is algorithmic modelling, specifically prediction-error-based reinforcement learning, because it can link underlying neural mechanisms to complex cognitive processes and behavioural symptoms (Hauser et al., 2019). Reinforcement learning (RL) models emerged from the recognition that learning, in its simplest form, occurs when the expected outcome (e.g. a reward) differs from the received outcome. This difference is the so-called prediction error (PE), which is negative if we receive less than expected and positive if the experienced outcome exceeds the expectation (Bush & Mosteller, 1951; Rescorla & Wagner, 1972; Sutton & Barto, 1981). Based on this PE, we learn about the environment and continually update our predictions and decisions in an attempt to maximize future reward (Sutton & Barto, 1981, 1998).
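As a minimal sketch of the prediction-error update described above, the following Python function implements a Rescorla-Wagner-style learning rule. The function name, the learning rate `alpha` and the example outcome sequence are illustrative assumptions and are not taken from any of the cited studies.

```python
def rescorla_wagner(outcomes, alpha=0.1, initial_value=0.0):
    """Track an expected value across trials with a prediction-error update.

    outcomes: sequence of received outcomes (e.g. 1 = reward, 0 = no reward)
    alpha:    learning rate between 0 and 1 (assumed constant here)
    Returns the expected value after each trial and the PE on each trial.
    """
    value = initial_value
    values, prediction_errors = [], []
    for outcome in outcomes:
        # PE = received outcome minus expected outcome:
        # negative when less than expected, positive when more.
        delta = outcome - value
        # Move the expectation a fraction alpha towards the outcome.
        value = value + alpha * delta
        values.append(value)
        prediction_errors.append(delta)
    return values, prediction_errors


# Three rewarded trials followed by one omitted reward.
values, prediction_errors = rescorla_wagner([1, 1, 1, 0], alpha=0.5)
print(prediction_errors)  # positive PEs shrink, then a negative PE on omission
```

With repeated reward the positive prediction errors shrink as the expectation catches up, and the omitted reward on the last trial produces a negative prediction error.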

Importantly, this cognitive and behavioural process has been linked to the brain, as the PE computations underlying reward learning were found to correlate directly with the release of the neurotransmitter dopamine (Montague, Dayan, & Sejnowski, 1996; Schultz, Dayan, & Montague, 1997). Since then, electrophysiological studies in humans (Zaghloul et al., 2009), non-human primates (Tanaka, O’Doherty, & Sakagami, 2019) and rodents (Takahashi, Langdon, Niv, & Schoenbaum, 2016) have established dopamine as a robust neural correlate of the PE term in reinforcement learning models (Nasser, Calu, Schoenbaum, & Sharpe, 2017).

Another important feature of RL algorithms is the ability to infer unknown aspects of the environment, a more complex form of decision-making known as model-based reasoning (Hauser et al., 2019). Several studies have shown that this process is constrained by development: it emerges in adolescence but only fully matures in adulthood (Decker, Otto, Daw, & Hartley, 2016; Potter, Bryce, & Hartley, 2017), and it is impaired, for example, in patients with OCD (Gillan, Kosinski, Whelan, Phelps, & Daw, 2016; Voon et al., 2015). In patients with ADHD, meanwhile, the processing of dopaminergic PE signals already seems to be deficient during adolescence, so that decision-making and learning abilities are impaired early in development (Hauser et al., 2014).
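To make the contrast between model-based and purely experience-driven (model-free) valuation more concrete, the sketch below computes model-based action values from an assumed transition model and mixes them with model-free values through a weighting parameter `w`. The function names, the two-stage task layout, the weight `w` and all numbers are hypothetical and chosen only for illustration; this is not the specific model fitted in the cited studies.

```python
def model_based_values(transition_probs, second_stage_values):
    """Plan one step ahead using a known (or learned) transition model.

    transition_probs[a][s]:    probability that action a leads to state s
    second_stage_values[s][b]: value of action b in second-stage state s
    Q_MB(a) = sum over s of P(s | a) * max_b Q(s, b)
    """
    return [
        sum(p * max(second_stage_values[s]) for s, p in enumerate(probs))
        for probs in transition_probs
    ]


def hybrid_values(q_model_based, q_model_free, w):
    """Weighted mixture: w = 1 is fully model-based, w = 0 fully model-free."""
    return [w * mb + (1 - w) * mf for mb, mf in zip(q_model_based, q_model_free)]


# Hypothetical task: two first-stage actions, two second-stage states.
transitions = [[0.75, 0.25], [0.25, 0.75]]
stage_two = [[1.0, 0.0], [0.0, 0.5]]
q_mb = model_based_values(transitions, stage_two)

# Hypothetical cached model-free values that favour the second action.
q_mf = [0.0, 1.0]
q_mixed = hybrid_values(q_mb, q_mf, w=0.5)
print(q_mb, q_mixed)
```

Under these assumptions, a lower `w` would correspond to weaker reliance on model-based reasoning, which is one way in which developmental or clinical differences could be expressed as a single parameter.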

The neural and algorithmic mechanisms associated with dopaminergic PE signals are also involved in other forms of learning. One example is social approval, where acceptance from others functions as social reinforcement (Jones et al., 2011). Another is self-esteem, where prediction errors arise from differences between expected and received social confirmation; impairments of these mechanisms may be a marker of psychiatric vulnerability (Will, Rutledge, Moutoussis, & Dolan, 2017). Making the measurement of social approval and self-esteem as objective and consistent as possible nevertheless remains a challenge. Beyond reward learning, dopaminergic PE signals were also found to encode effort learning, which was processed in dorsomedial prefrontal brain regions (Hauser, Eldar, & Dolan, 2017) that have also been related to apathy, understood as a loss of motivation to exert effort in order to obtain rewards (Chong, 2018; Marin, 1991). Apathy is present in, and even considered a crucial determinant of, many psychiatric disorders that typically emerge during adolescence, such as depression and schizophrenia (Green, Horan, Barch, & Gold, 2015). Similarly, social approval and self-esteem play a critical role in anxiety and depressive disorders with a neurodevelopmental origin (Orth & Robins, 2013; Sowislo & Orth, 2013).
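The idea of a social prediction error driving self-esteem can be illustrated with a deliberately simplified sketch. It is not the model reported by Will et al. (2017); the function name, the parameters `pe_weight` and `alpha`, and the example values are assumptions made purely for illustration.

```python
def update_self_esteem(self_esteem, expected_approval, feedback,
                       pe_weight=0.25, alpha=0.5):
    """One trial of a simplified social-feedback update.

    feedback:          1.0 for approval, 0.0 for disapproval
    expected_approval: current expectation of being approved of (0 to 1)
    pe_weight:         how strongly the social PE shifts self-esteem (assumed)
    alpha:             learning rate for the approval expectation (assumed)
    """
    # Social PE: received minus expected social confirmation.
    social_pe = feedback - expected_approval
    new_self_esteem = self_esteem + pe_weight * social_pe
    new_expectation = expected_approval + alpha * social_pe
    return new_self_esteem, new_expectation, social_pe


# The same unexpected rejection, weighted moderately versus strongly.
moderate, _, pe = update_self_esteem(0.5, 0.75, 0.0, pe_weight=0.25)
strong, _, _ = update_self_esteem(0.5, 0.75, 0.0, pe_weight=0.5)
print(pe, moderate, strong)
```

In this toy setting, the same unexpected rejection lowers self-esteem more when the social prediction error carries a larger weight.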

Beyond what behavioural and subjective self-assessments can uncover about the underlying mechanisms, computational psychiatry, with the help of algorithmic models, has revealed that unhealthy self-esteem updates were based on the neural processing of over-weighted social PE signals during social learning (Hauser et al., 2019). This means that the internalisation of social feedback and rejection, which individuals are most prone to incorporate into their self-image during adolescence, increases the risk of developing such psychiatric disorders (Davey, Yücel, & Allen, 2008). Crucially, the stability of self-esteem also varies across individuals and predicts how responsive they are to certain treatment and therapy options (Roberts, Shapiro, & Gamble, 1999). Computational psychiatry is clearly contributing to our mechanistic understanding of disorders, but this contribution is always constrained by the quality of the respective models, the data they capture and the cases they generalise to (Hauser et al., 2019). In practice, models often struggle with considerable uncertainty in the estimation of their parameters, as well as with noise in the data and the question of how best to deal with it. All too often, errors are introduced unknowingly, for instance when a model is made so complex that it overfits variability that is merely noise (van den Bos, Bruckner, Nassar, Mata, & Eppinger, 2018).
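One common safeguard against the overfitting problem mentioned above is to penalise model complexity when comparing candidate models. The sketch below uses the Bayesian information criterion (BIC) as one such penalty; the choice of BIC, the function name and the log-likelihood values are assumptions for illustration and are not results from any cited study.

```python
import math


def bic(log_likelihood, n_params, n_observations):
    """Bayesian information criterion; lower values indicate a better trade-off.

    BIC = k * ln(n) - 2 * ln(L), where k is the number of free parameters,
    n the number of observations and ln(L) the maximised log-likelihood.
    """
    return n_params * math.log(n_observations) - 2.0 * log_likelihood


# Invented example: the complex model fits slightly better (higher
# log-likelihood) but uses three times as many free parameters.
bic_simple = bic(log_likelihood=-120.0, n_params=2, n_observations=200)
bic_complex = bic(log_likelihood=-118.5, n_params=6, n_observations=200)

preferred = "simple" if bic_simple < bic_complex else "complex"
print(preferred)
```

Here the small gain in fit does not outweigh the penalty for the additional parameters, so the simpler model is preferred.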

What is more relevant to the clinical practice of psychiatry, however, is the selection of the best-suited treatment option for a patient. For this purpose, a computational approach can be used to look for relevant factors and interactions between treatments, based on trial data in which patients were randomly assigned to different treatment options (Huys et al., 2016). Interestingly, this has revealed that demographic factors such as marriage and employment, as well as a record of previously unsuccessful antidepressant trials, can accurately predict that cognitive-behavioural therapy would be the better treatment choice, whereas comorbidity with a personality disorder makes antidepressants more appropriate (DeRubeis et al., 2014).
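The logic of such treatment selection can be sketched as follows: a statistical model predicts a patient's symptom score under each treatment, and the difference between the two predictions indicates which treatment is expected to work better for that individual. The coefficients below are invented for illustration only and are not the estimates reported by DeRubeis et al. (2014); the function names and feature names are likewise hypothetical.

```python
# Hypothetical regression coefficients (invented, illustration only).
# MAIN applies under both treatments; CBT_INTERACTION is added under CBT.
MAIN = {"intercept": 12.0, "married": -1.0, "employed": -1.0,
        "prior_med_trials": 0.5, "personality_disorder": 1.0}
CBT_INTERACTION = {"intercept": 0.5, "married": -1.5, "employed": -1.0,
                   "prior_med_trials": -1.0, "personality_disorder": 3.0}


def predicted_score(patient, treatment):
    """Predicted post-treatment symptom score (lower is better)."""
    features = {"intercept": 1.0, **patient}
    score = sum(MAIN[name] * x for name, x in features.items())
    if treatment == "cbt":
        score += sum(CBT_INTERACTION[name] * x for name, x in features.items())
    return score


def advantage_index(patient):
    """Positive: CBT is predicted to yield the lower score; negative: medication."""
    return predicted_score(patient, "medication") - predicted_score(patient, "cbt")


patient_a = {"married": 1, "employed": 1, "prior_med_trials": 2,
             "personality_disorder": 0}
patient_b = {"married": 0, "employed": 0, "prior_med_trials": 0,
             "personality_disorder": 1}
index_a = advantage_index(patient_a)
index_b = advantage_index(patient_b)
print(index_a, index_b)
```

With these made-up coefficients, the married, employed patient with previous unsuccessful medication trials is steered towards cognitive-behavioural therapy, while the patient with a comorbid personality disorder is steered towards medication, mirroring the direction of the findings described above.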

As a consequence of the better treatment selection achieved with such models, a significant and measurable reduction on the Hamilton Rating Scale for Depression was attained, considerably beyond that following traditional treatment selection (National Collaborating Centre for Mental Health, 2010). However, a main point of criticism from psychiatrists has been that complicated and highly theoretical computational models are neither intuitive for clinicians to understand nor sufficient to replace the human component and multifaceted approach that professional psychiatrists with years of practical experience can offer their patients (Huys et al., 2011).

Conclusion

Theory-driven computational approaches depend on the availability of prior knowledge and reliable behavioural, neural or biophysical measurements in order to extract the most relevant parameters from psychiatric disorders. Based on these, data-driven approaches can then provide better potential targets for investigating future treatment recommendations. Of course, computational models are limited by the current boundaries and insights of psychiatric research as well as by the inherent difficulty of measuring certain variables. Nevertheless, rigorous hypothesis testing can be performed on complex data by using advanced quantitative methods to determine which model provides the best explanation, while at the same time producing qualitative and clinically useful predictions (Huys et al., 2011). A developmental perspective is also very insightful for this purpose, because it reveals when and how certain disorders emerge in the first place. This makes it possible to link the impairments and general mechanisms underlying psychiatric disorders to specific differences in biographical or environmental influences and brain development, in order to develop more targeted, science-based treatments or potentially even new, more effective and focused prevention.

References

Bush, R. R., & Mosteller, F. (1951). A mathematical model for simple learning. Psychological Review, 58(5), 313–323. https://doi.org/10.1037/h0054388

Chong, T. T. J. (2018). Updating the role of dopamine in human motivation and apathy. Current Opinion in Behavioral Sciences, 22, 35–41. https://doi.org/10.1016/j.cobeha.2017.12.010

Davey, C. G., Yücel, M., & Allen, N. B. (2008). The emergence of depression in adolescence: Development of the prefrontal cortex and the representation of reward. Neuroscience and Biobehavioral Reviews, 32, 1–19. https://doi.org/10.1016/j.neubiorev.2007.04.016

Decker, J. H., Otto, A. R., Daw, N. D., & Hartley, C. A. (2016). From Creatures of Habit to Goal-Directed Learners: Tracking the Developmental Emergence of Model-Based Reinforcement Learning. Psychological Science, 27(6), 848–858. https://doi.org/10.1177/0956797616639301

DeRubeis, R. J., Cohen, Z. D., Forand, N. R., Fournier, J. C., Gelfand, L. A., & Lorenzo-Luaces, L. (2014). The Personalized Advantage Index: Translating Research on Prediction into Individualized Treatment Recommendations. A Demonstration. PLoS ONE, 9(1), e83875. https://doi.org/10.1371/journal.pone.0083875

Frankenhuis, W. E., & Fraley, R. C. (2017). What Do Evolutionary Models Teach Us About Sensitive Periods in Psychological Development? European Psychologist. https://doi.org/10.1027/1016-9040/A000265

Gillan, C. M., Kosinski, M., Whelan, R., Phelps, E. A., & Daw, N. D. (2016). Characterizing a psychiatric symptom dimension related to deficits in goal-directed control. eLife, 5, e11305. https://doi.org/10.7554/eLife.11305

Green, M. F., Horan, W. P., Barch, D. M., & Gold, J. M. (2015). Effort-Based Decision Making: A Novel Approach for Assessing Motivation in Schizophrenia. Schizophrenia Bulletin, 41(5), 1035–1044. https://doi.org/10.1093/schbul/sbv071

Hauser, T. U., Eldar, E., & Dolan, R. J. (2017). Separate mesocortical and mesolimbic pathways encode effort and reward learning signals. Proceedings of the National Academy of Sciences of the United States of America, 114(35), E7395–E7404. https://doi.org/10.1073/pnas.1705643114

Hauser, T. U., Iannaccone, R., Ball, J., Mathys, C., Brandeis, D., Walitza, S., & Brem, S. (2014). Role of the medial prefrontal cortex in impaired decision making in juvenile attention-deficit/hyperactivity disorder. JAMA Psychiatry, 71(10), 1165–1173. https://doi.org/10.1001/jamapsychiatry.2014.1093

Hauser, T. U., Will, G. J., Dubois, M., & Dolan, R. J. (2019). Annual Research Review: Developmental computational psychiatry. Journal of Child Psychology and Psychiatry and Allied Disciplines, 60(4), 412–426. https://doi.org/10.1111/jcpp.12964

Huys, Q. J. M., Moutoussis, M., & Williams, J. (2011). Are computational models of any use to psychiatry? Neural Networks, 24, 544–551. https://doi.org/10.1016/j.neunet.2011.03.001

Huys, Q., Maia, T., & Frank, M. (2016). Computational psychiatry as a bridge from neuroscience to clinical applications. Nature Neuroscience, 19, 404–413. https://doi.org/10.1038/nn.4238

Jones, R. M., Somerville, L. H., Li, J., Ruberry, E. J., Libby, V., Glover, G., … Casey, B. J. (2011). Behavioral and neural properties of social reinforcement learning. Journal of Neuroscience, 31(37), 13039–13045. https://doi.org/10.1523/JNEUROSCI.2972-11.2011

Kessler, R. C., Berglund, P., Demler, O., Jin, R., Merikangas, K. R., & Walters, E. E. (2005). Lifetime prevalence and age-of-onset distributions of DSM-IV disorders in the National Comorbidity Survey Replication. Archives of General Psychiatry, 62, 593–602. https://doi.org/10.1001/archpsyc.62.6.593

Marin, R. S. (1991). Apathy: A neuropsychiatric syndrome. Journal of Neuropsychiatry and Clinical Neurosciences, 3, 243–254. https://doi.org/10.1176/jnp.3.3.243

Montague, P. R., Dayan, P., & Sejnowski, T. J. (1996). A framework for mesencephalic dopamine systems based on predictive Hebbian learning. Journal of Neuroscience, 16(5), 1936–1947. https://doi.org/10.1523/jneurosci.16-05-01936.1996

Nasser, H. M., Calu, D. J., Schoenbaum, G., & Sharpe, M. J. (2017). The dopamine prediction error: Contributions to associative models of reward learning. Frontiers in Psychology, 8, 244. https://doi.org/10.3389/fpsyg.2017.00244

Orth, U., & Robins, R. W. (2013). Understanding the Link Between Low Self-Esteem and Depression. Current Directions in Psychological Science, 22(6), 455–460. https://doi.org/10.1177/0963721413492763

Paus, T., Keshavan, M., & Giedd, J. N. (2008). Why do many psychiatric disorders emerge during adolescence? Nature Reviews Neuroscience, 9, 947–957. https://doi.org/10.1038/nrn2513

Potter, T. C. S., Bryce, N. V., & Hartley, C. A. (2017). Cognitive components underpinning the development of model-based learning. Developmental Cognitive Neuroscience, 25, 272–280. https://doi.org/10.1016/j.dcn.2016.10.005

Rescorla, R. A., & Wagner, A. R. (1972). A Theory of Pavlovian Conditioning: Variations in the Effectiveness of Reinforcement and Nonreinforcement. In A. H. Black & W. F. Prokasy (Eds.), Classical Conditioning II: Current Research and Theory (pp. 64–99). Appleton-Century-Crofts.

Roberts, J. E., Shapiro, A. M., & Gamble, S. A. (1999). Level and perceived stability of self-esteem prospectively predict depressive symptoms during psychoeducational group treatment. British Journal of Clinical Psychology, 38(4), 425–429. https://doi.org/10.1348/014466599162917

Schultz, W., Dayan, P., & Montague, P. R. (1997). A neural substrate of prediction and reward. Science, 275(5306), 1593–1599. https://doi.org/10.1126/science.275.5306.1593

Sowislo, J. F., & Orth, U. (2013). Does low self-esteem predict depression and anxiety? A meta-analysis of longitudinal studies. Psychological Bulletin, 139(1), 213–240. https://doi.org/10.1037/a0028931

Sutton, R. S., & Barto, A. G. (1981). Toward a modern theory of adaptive networks: Expectation and prediction. Psychological Review, 88(2), 135–170. https://doi.org/10.1037/0033-295X.88.2.135

Sutton, R. S., & Barto, A. G. (1998). Reinforcement Learning: An Introduction. MIT Press.

Takahashi, Y. K., Langdon, A. J., Niv, Y., & Schoenbaum, G. (2016). Temporal Specificity of Reward Prediction Errors Signaled by Putative Dopamine Neurons in Rat VTA Depends on Ventral Striatum. Neuron, 91(1), 182–193. https://doi.org/10.1016/j.neuron.2016.05.015

Tanaka, S., O’Doherty, J. P., & Sakagami, M. (2019). The cost of obtaining rewards enhances the reward prediction error signal of midbrain dopamine neurons. Nature Communications, 10(1), 1–13. https://doi.org/10.1038/s41467-019-11334-2

van den Bos, W., Bruckner, R., Nassar, M. R., Mata, R., & Eppinger, B. (2018). Computational neuroscience across the lifespan: Promises and pitfalls. Developmental Cognitive Neuroscience, 33, 42–53. https://doi.org/10.1016/j.dcn.2017.09.008

Voon, V., Baek, K., Enander, J., Worbe, Y., Morris, L. S., Harrison, N. A., … Daw, N. (2015). Motivation and value influences in the relative balance of goal-directed and habitual behaviours in obsessive-compulsive disorder. Translational Psychiatry, 5(11), e670. https://doi.org/10.1038/tp.2015.165

Will, G. J., Rutledge, R. B., Moutoussis, M., & Dolan, R. J. (2017). Neural and computational processes underlying dynamic changes in self-esteem. eLife, 6, e28098. https://doi.org/10.7554/eLife.28098

Zaghloul, K. A., Blanco, J. A., Weidemann, C. T., McGill, K., Jaggi, J. L., Baltuch, G. H., & Kahana, M. J. (2009). Human substantia nigra neurons encode unexpected financial rewards. Science, 323(5920), 1496–1499. https://doi.org/10.1126/science.1167342